What anonymous contributors think about important life and career questions (Article)

Today we’re launching the final entry of our ‘anonymous answers’ series on the website. It features answers to 23 different questions including “How have you seen talented people fail in their work?” and “What’s one way to be successful you don’t think people talk about enough?”, from anonymous people whose work we admire.

We thought a lot of the responses were really interesting; some were provocative, others just surprising. And as intended, they span a very wide range of opinions. So we decided to share some highlights here with you podcast subscribers. This is only a sample though, including a few answers from just 10 of those 23 questions.

You can find the rest of the answers at 80000hours.org/anonymous or follow a link here to an individual entry:

1. What's good career advice you wouldn’t want to have your name on?
2. How have you seen talented people fail in their work?
3. What’s the thing people most overrate in their career?
4. If you were at the start of your career again, what would you do differently this time?
5. If you're a talented young person how risk averse should you be?
6. Among people trying to improve the world, what are the bad habits you see most often?
7. What mistakes do people most often make when deciding what work to do?
8. What's one way to be successful you don't think people talk about enough?
9. How honest & candid should high-profile people really be?
10. What’s some underrated general life advice?
11. Should the effective altruism community grow faster or slower? And should it be broader, or narrower?
12. What are the biggest flaws of 80,000 Hours?
13. What are the biggest flaws of the effective altruism community?
14. How should the effective altruism community think about diversity?
15. Are there any myths that you feel obligated to support publicly?

And five other questions.

Finally, if you’d like us to produce more or less content like this, please let us know at podcast@80000hours.org.

5 June 2020, 37min

#79 – A.J. Jacobs on radical honesty, following the whole Bible, and reframing global problems as puzzles

Today’s guest, New York Times bestselling author A.J. Jacobs, always hated Judge Judy. But after he found out that she was his seventh cousin, he thought, "You know what? She's not so bad."

Hijacking this bias towards family and trying to broaden it to everyone led to his three-year adventure to help build the biggest family tree in history.

He’s also spent months saying whatever was on his mind, tried to become the healthiest person in the world, read 33,000 pages of facts, spent a year following the Bible literally, thanked everyone involved in making his morning cup of coffee, and tried to figure out how to do the most good. His next book will ask: if we reframe global problems as puzzles, would the world be a better place?

Links to learn more, summary and full transcript.

This is the first time I’ve hosted the podcast, and I’m hoping to convince people to listen with this attempt at clever show notes that change style each paragraph to reference different A.J. experiments. I don’t actually think it’s that clever, but all of my other ideas seemed worse. I really have no idea how people will react to this episode; I loved it, but I definitely think I’m more entertaining than almost anyone else will. (Radical Honesty.)

We do talk about some useful stuff — one of which is the concept of micro goals. When you wake up in the morning, just commit to putting on your workout clothes. Once they’re on, maybe you’ll think that you might as well get on the treadmill — just for a minute. And once you’re on for 1 minute, you’ll often stay on for 20. So I’m not asking you to commit to listening to the whole episode — just to put on your headphones. (Drop Dead Healthy.)

Another reason to listen is for the facts:
• The Bayer aspirin company invented heroin as a cough suppressant
• Coriander is just the British way of saying cilantro
• Dogs have a third eyelid to protect the eyeball from irritants
• A.J. read all 44 million words of the Encyclopedia Britannica from A to Z, which drove home the idea that we know so little about the world (although he does now know that opossums have 13 nipples)
(The Know-It-All.)

One extra argument for listening: If you interpret the second commandment literally, then it tells you not to make a likeness of anything in heaven, on earth, or underwater — which rules out basically all images. That means no photos, no TV, no movies. So, if you want to respect the Bible, you should definitely consider making podcasts your main source of entertainment (as long as you’re not listening on the Sabbath). (The Year of Living Biblically.)

I’m so thankful to A.J. for doing this. But I also want to thank Julie, Jasper, Zane and Lucas who allowed me to spend the day in their home; the construction worker who told me how to get to my subway platform on the morning of the interview; and Queen Jadwiga for making bagels popular in the 1300s, which kept me going during the recording. (Thanks a Thousand.)

We also discuss:
• Blackmailing yourself
• The most extreme ideas A.J.’s ever considered
• Doing good as a writer
• And much more.

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:01:51)
• Puzzles (00:05:41)
• Radical honesty (00:12:18)
• The Year of Living Biblically (00:24:17)
• Thanks A Thousand (00:38:04)
• Drop Dead Healthy (00:49:22)
• Blackmailing yourself (00:57:46)
• The Know-It-All (01:03:00)
• Effective altruism (01:31:38)
• Longtermism (01:55:35)
• It’s All Relative (02:01:00)
• Journalism (02:10:06)
• Writing careers (02:17:15)
• Rob’s outro (02:34:37)

Producer: Keiran Harris. Audio mastering: Ben Cordell. Transcriptions: Zakee Ulhaq.

1 June 2020, 2h 38min

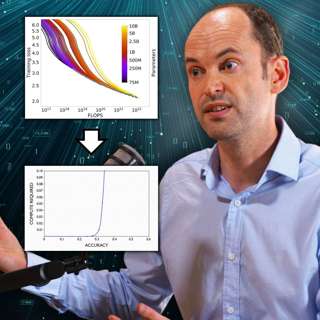

#78 – Danny Hernandez on forecasting and the drivers of AI progress

Companies use about 300,000 times more computation training the best AI systems today than they did in 2012, and algorithmic innovations have also made them 25 times more efficient at the same tasks.

These are the headline results of two recent papers — AI and Compute and AI and Efficiency — from the Foresight Team at OpenAI. In today's episode I spoke with one of the authors, Danny Hernandez, who joined OpenAI after helping develop better forecasting methods at Twitch and Open Philanthropy.

Danny and I talk about how to understand his team's results and what they mean (and don't mean) for how we should think about progress in AI going forward.

Links to learn more, summary and full transcript.

Debates around the future of AI can sometimes be pretty abstract and theoretical. Danny hopes that providing rigorous measurements of some of the inputs to AI progress so far can help us better understand what causes that progress, as well as ground debates about the future of AI in a better shared understanding of the field.

If this research sounds appealing, you might be interested in applying to join OpenAI's Foresight team — they're currently hiring research engineers.

In the interview, Danny and I (Arden Koehler) also discuss a range of other topics, including:
• The question of which experts to believe
• Danny's journey to working at OpenAI
• The usefulness of "decision boundaries"
• The importance of Moore's law for people who care about the long-term future
• What OpenAI's Foresight Team's findings might imply for policy
• The question of whether progress in the performance of AI systems is linear
• The safety teams at OpenAI and who they're looking to hire
• One idea for finding someone to guide your learning
• The importance of hardware expertise for making a positive impact

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:01:29)
• Forecasting (00:07:11)
• Improving the public conversation around AI (00:14:41)
• Danny’s path to OpenAI (00:24:08)
• Calibration training (00:27:18)
• AI and Compute (00:45:22)
• AI and Efficiency (01:09:22)
• Safety teams at OpenAI (01:39:03)
• Careers (01:49:46)
• AI hardware as a possible path to impact (01:55:57)
• Triggers for people’s major decisions (02:08:44)

Producer: Keiran Harris. Audio mastering: Ben Cordell. Transcriptions: Zakee Ulhaq.

22 May 2020, 2h 11min

#77 – Marc Lipsitch on whether we're winning or losing against COVID-19

In March Professor Marc Lipsitch — Director of Harvard's Center for Communicable Disease Dynamics — abruptly found himself a global celebrity, his social media following growing 40-fold and journalists knocking down his door, as everyone turned to him for information they could trust.

Here he lays out where the fight against COVID-19 stands today, why he's open to deliberately giving people COVID-19 to speed up vaccine development, and how we could do better next time.

As Marc tells us, island nations like Taiwan and New Zealand are successfully suppressing SARS-CoV-2. But everyone else is struggling.

Links to learn more, summary and full transcript.

Even Singapore, with plenty of warning and one of the best test and trace systems in the world, lost control of the virus in mid-April after successfully holding back the tide for 2 months.

This doesn't bode well for how the US or Europe will cope as they ease their lockdowns. It also suggests it would have been exceedingly hard for China to stop the virus before it spread overseas.

But sadly, there's no easy way out.

The original estimates of COVID-19's infection fatality rate, of 0.5-1%, have turned out to be basically right. And the latest serology surveys indicate only 5-10% of people in countries like the US, UK and Spain have been infected so far, leaving us far short of herd immunity. To get there, even these worst affected countries would need to endure something like ten times the number of deaths they have so far.

Marc has one good piece of news: research suggests that most of those who get infected do indeed develop immunity, for a while at least.

To escape the COVID-19 trap sooner rather than later, Marc recommends we go hard on all the familiar options — vaccines, antivirals, and mass testing — but also open our minds to creative options we've so far left on the shelf.

Despite the importance of his work, even now the training and grant programs that produced the community of experts Marc is a part of are shrinking. We look at a new article he's written about how to instead build and improve the field of epidemiology, so humanity can respond faster and smarter next time we face a disease that could kill millions and cost tens of trillions of dollars.

We also cover:
• How listeners might contribute as future contagious disease experts, or donors to current projects
• How we can learn from cross-country comparisons
• Modelling that has gone wrong in an instructive way
• What governments should stop doing
• How people can figure out who to trust, and who has been most on the mark this time
• Why Marc supports infecting people with COVID-19 to speed up the development of a vaccine
• How we can ensure there's population-level surveillance early during the next pandemic
• Whether people from other fields trying to help with COVID-19 has done more good than harm
• Whether it's experts in diseases, or experts in forecasting, who produce better disease forecasts

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:01:45)
• Things Rob wishes he knew about COVID-19 (00:05:23)
• Cross-country comparisons (00:10:53)
• Any government activities we should stop? (00:21:24)
• Lessons from COVID-19 (00:33:31)
• Global catastrophic biological risks (00:37:58)
• Human challenge trials (00:43:12)
• Disease surveillance (00:50:07)
• Who should we trust? (00:58:12)
• Epidemiology as a field (01:13:05)
• Careers (01:31:28)

Producer: Keiran Harris. Audio mastering: Ben Cordell. Transcriptions: Zakee Ulhaq.

18 May 2020, 1h 37min

Article: Ways people trying to do good accidentally make things worse, and how to avoid them

Today’s release is the second experiment in making audio versions of our articles. The first was a narration of Greg Lewis’ terrific problem profile on ‘Reducing global catastrophic biological risks’, which you can find on the podcast feed just before episode #74 - that is, our interview with Greg about the piece. If you want to check out the links in today’s article, you can find those here. And if you have feedback on these, positive or negative, it’d be great if you could email us at podcast@80000hours.org.

12 May 2020, 26min

#76 – Tara Kirk Sell on misinformation, who's done well and badly, & what to reopen first

Amid a rising COVID-19 death toll, and looming economic disaster, we’ve been looking for good news — and one thing we're especially thankful for is the Johns Hopkins Center for Health Security (CHS).

CHS focuses on protecting us from major biological, chemical or nuclear disasters, through research that informs governments around the world. While this pandemic surprised many, just last October the Center ran a simulation of a 'new coronavirus' scenario to identify weaknesses in our ability to quickly respond. Their expertise has given them a key role in figuring out how to fight COVID-19.

Today’s guest, Dr Tara Kirk Sell, did her PhD in policy and communication during disease outbreaks, and has worked at CHS for 11 years on a range of important projects.

• Links to learn more, summary and full transcript.

Last year she was a leader on Collective Intelligence for Disease Prediction, designed to sound the alarm about upcoming pandemics before others are paying attention. Incredibly, the project almost closed in December, with COVID-19 just starting to spread around the world — but received new funding that allowed the project to respond quickly to the emerging disease.

She also contributed to a recent report attempting to explain the risks of specific types of activities resuming when COVID-19 lockdowns end. We can't achieve zero risk — so differentiating activities on a spectrum is crucial. Choosing wisely can help us lead more normal lives without reviving the pandemic.

Dance clubs will have to stay closed, but hairdressers can adapt to minimise transmission, and Tara, who happens to be an Olympic silver-medalist in swimming, suggests outdoor non-contact sports could resume soon without much risk.

Her latest project deals with the challenge of misinformation during disease outbreaks. Analysing the Ebola communication crisis of 2014, they found that even trained coders with public health expertise sometimes needed help to distinguish between true and misleading tweets — showing the danger of a continued lack of definitive information surrounding a virus and how it’s transmitted.

The challenge for governments is not simple. If they acknowledge how much they don't know, people may look elsewhere for guidance. But if they pretend to know things they don't, the result can be a huge loss of trust.

Despite their intense focus on COVID-19, researchers at CHS know that this is no one-off event. Many aspects of our collective response this time around have been alarmingly poor, and it won’t be long before Tara and her colleagues need to turn their minds to next time.

You can now donate to CHS through Effective Altruism Funds. Donations made through EA Funds are tax-deductible in the US, the UK, and the Netherlands.

Tara and Rob also discuss:
• Who has overperformed and underperformed expectations during COVID-19?
• When are people right to mistrust authorities?
• The media’s responsibility to be right
• What policy changes should be prioritised for next time
• Should we prepare for future pandemics while COVID-19 is still going?
• The importance of keeping non-COVID health problems in mind
• The psychological difference between staying home voluntarily and being forced to
• Mistakes that we in the general public might be making
• Emerging technologies with the potential to reduce global catastrophic biological risks

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:01:43)
• Misinformation (00:05:07)
• Who has done well during COVID-19? (00:22:19)
• Guidance for governors on reopening (00:34:05)
• Collective Intelligence for Disease Prediction project (00:45:35)
• What else is CHS trying to do to address the pandemic? (00:59:51)
• Deaths are not the only health impact of importance (01:05:33)
• Policy change for future pandemics (01:10:57)
• Emerging technologies with potential to reduce global catastrophic biological risks (01:22:37)
• Careers (01:38:52)
• Good news about COVID-19 (01:44:23)

Producer: Keiran Harris. Audio mastering: Ben Cordell. Transcriptions: Zakee Ulhaq.

8 May 2020, 1h 53min

#75 – Michelle Hutchinson on what people most often ask 80,000 Hours

Since it was founded, 80,000 Hours has done one-on-one calls to supplement our online content and offer more personalised advice. We try to help people get clear on their most plausible paths, the key uncertainties they face in choosing between them, and provide resources, pointers, and introductions to help them in those paths.

I (Michelle Hutchinson) joined the team a couple of years ago after working at Oxford's Global Priorities Institute, and these days I'm 80,000 Hours' Head of Advising. Since then, chatting to hundreds of people about their career plans has given me some idea of the kinds of things it’s useful for people to hear about when thinking through their careers. So we thought it would be useful to discuss some on the show for everyone to hear.

• Links to learn more, summary and full transcript.
• See over 500 vacancies on our job board.
• Apply for one-on-one career advising.

Among other common topics, we cover:
• Why traditional careers advice involves thinking through what types of roles you enjoy followed by which of those are impactful, while we recommend going the other way: ranking roles on impact, and then going down the list to find the one you think you’d most flourish in.
• That if you’re pitching your job search at the right level of role, you’ll need to apply to a large number of different jobs. So it's wise to broaden your options, by applying for both stretch and backup roles, and not over-emphasising a small number of organisations.
• Our suggested process for writing a longer-term career plan: 1. shortlist your best medium to long-term career options, then 2. figure out the key uncertainties in choosing between them, and 3. map out concrete next steps to resolve those uncertainties.
• Why many listeners aren't spending enough time finding out about what the day-to-day work is like in paths they're considering, or reaching out to people for advice or opportunities.
• The difficulty of maintaining the ambition to increase your social impact, while also being proud of and motivated by what you're already accomplishing.

I also thought it might be useful to give people a sense of what I do and don’t do in advising calls, to help them figure out if they should sign up for it.

If you’re wondering whether you’ll benefit from advising, bear in mind that it tends to be more useful to people:
1. With similar views to 80,000 Hours on what the world’s most pressing problems are, because we’ve done the most research on the problems we think it’s most important to address.
2. Who don’t yet have close connections with people working at effective altruist organisations.
3. Who aren’t strongly locationally constrained.

If you’re unsure, it doesn’t take long to apply, and a lot of people say they find the application form itself helps them reflect on their plans. We’re particularly keen to hear from people from under-represented backgrounds.

Also in this episode:
• I describe mistakes I’ve made in advising, and career changes made by people I’ve spoken with.
• Rob and I argue about what risks to take with your career, like when it’s sensible to take a study break, or start from the bottom in a new career path.
• I try to forecast how I’ll change after I have a baby, Rob speculates wildly on what motherhood is like, and Arden and I mercilessly mock Rob.

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:02:50)
• The process of advising (00:09:34)
• We’re not just excited about our priority paths (00:14:37)
• Common things Michelle says during advising (00:18:13)
• Interpersonal comparisons (00:31:18)
• Thinking about current impact (00:40:31)
• Applying to different kinds of orgs (00:42:29)
• Difference in impact between jobs / causes (00:49:04)
• Common mistakes (00:55:40)
• Career change stories (01:11:44)
• When is advising really useful for people? (01:24:28)
• Managing risk in careers (01:55:29)

Producer: Keiran Harris. Audio mastering: Ben Cordell. Transcriptions: Zakee Ulhaq.

28 April 2020, 2h 13min

#74 – Dr Greg Lewis on COVID-19 & catastrophic biological risks

Our lives currently revolve around the global emergency of COVID-19; you’re probably reading this while confined to your house, as the death toll from the worst pandemic since 1918 continues to rise.

The question of how to tackle COVID-19 has been foremost in the minds of many, including here at 80,000 Hours.

Today's guest, Dr Gregory Lewis, acting head of the Biosecurity Research Group at Oxford University's Future of Humanity Institute, puts the crisis in context, explaining how COVID-19 compares to other diseases, pandemics of the past, and possible worse crises in the future.

COVID-19 is a vivid reminder that we are unprepared to contain or respond to new pathogens.

How would we cope with a virus that was even more contagious and even more deadly? Greg's work focuses on these risks: outbreaks that threaten our entire future through an unrecoverable collapse of civilisation, or even the extinction of humanity.

Links to learn more, summary and full transcript.

If such a catastrophe were to occur, Greg believes it’s more likely to be caused by accidental or deliberate misuse of biotechnology than by a pathogen developed by nature.

There are a few direct causes for concern: humans now have the ability to produce some of the most dangerous diseases in history in the lab; technological progress may enable the creation of pathogens which are nastier than anything we see in nature; and most biotechnology has yet to even be conceived, so we can’t assume all the dangers will be familiar.

This is grim stuff, but it needn’t be paralysing. In the years following COVID-19, humanity may be inspired to better prepare for the existential risks of the next century: improving our science, updating our policy options, and enhancing our social cohesion.

COVID-19 is a tragedy of stunning proportions, and its immediate threat is undoubtedly worthy of significant resources.

But we will get through it; if a future biological catastrophe poses an existential risk, we may not get a second chance. It is therefore vital to learn every lesson we can from this pandemic, and provide our descendants with the security we wish for ourselves.

Today’s episode is the hosting debut of our Strategy Advisor, Howie Lempel.

80,000 Hours has focused on COVID-19 for the last few weeks and published over ten pieces about it, and a substantial benefit of this interview was to help inform our own views. As such, at times this episode may feel like eavesdropping on a private conversation, and it is likely to be of most interest to people primarily focused on making the long-term future go as well as possible.

In this episode, Howie and Greg cover:
• Reflections on the first few months of the pandemic
• Common confusions around COVID-19
• How COVID-19 compares to other diseases
• What types of interventions have been available to policymakers
• Arguments for and against working on global catastrophic biological risks (GCBRs)
• How to know if you’re a good fit to work on GCBRs
• The response of the effective altruism community, as well as 80,000 Hours in particular, to COVID-19
• And much more.

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:03:15)
• What is COVID-19? (00:16:05)
• If you end up infected, how severe is it likely to be? (00:19:21)
• How does COVID-19 compare to other diseases? (00:25:42)
• Common confusions around COVID-19 (00:32:02)
• What types of interventions were available to policymakers? (00:46:20)
• Nonpharmaceutical Interventions (01:04:18)
• What can you do personally? (01:18:25)
• Reflections on the first few months of the pandemic (01:23:46)
• Global catastrophic biological risks (GCBRs) (01:26:17)
• Counterarguments to working on GCBRs (01:45:56)
• How do GCBRs compare to other problems? (01:49:05)
• Careers (01:59:50)
• The response of the effective altruism community to COVID-19 (02:11:42)
• The response of 80,000 Hours to COVID-19 (02:28:12)

Get this episode by subscribing: type '80,000 Hours' into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris. Audio mastering: Ben Cordell. Transcriptions: Zakee Ulhaq.

17 April 2020, 2h 37min