#7 - Julia Galef on making humanity more rational, what EA does wrong, and why Twitter isn’t all bad

The scientific revolution in the 16th century was one of the biggest societal shifts in human history, driven by the discovery of new and better methods of figuring out who was right and who was wrong.

Julia Galef - a well-known writer and researcher focused on improving human judgment, especially about high stakes questions - believes that if we could again develop new techniques to predict the future, resolve disagreements and make sound decisions together, it could dramatically improve the world across the board. We brought her in to talk about her ideas. This interview complements a new detailed review of whether and how to follow Julia’s career path.

Apply for personalised coaching, see what questions are asked when, and read extra resources to learn more.

Julia has hosted the Rationally Speaking podcast since 2010, co-founded the Center for Applied Rationality in 2012, and is currently working for the Open Philanthropy Project on an investigation of expert disagreements.

In our conversation we ended up speaking about a wide range of topics, including:

* Her research on how people can have productive intellectual disagreements.
* Why she once planned to become an urban designer.
* Why she doubts people are more rational than 200 years ago.
* What makes her a fan of Twitter (while I think it’s dystopian).
* Whether people should write more books.
* Whether it’s a good idea to run a podcast, and how she grew her audience.
* Why saying you don’t believe X often won’t convince people you don’t.
* Why she started a PhD in economics but then stopped.
* Whether she would recommend an unconventional career like her own.
* Whether the incentives in the intelligence community actually support sound thinking.
* Whether big institutions will actually pick up new tools for improving decision-making if they are developed.
* How to start out pursuing a career in which you enhance human judgement and foresight.

Get free, one-on-one career advice to help you improve judgement and decision-making

We’ve helped dozens of people compare their options, get introductions, and find jobs important for the long-run future. **If you want to work on any of the problems discussed in this episode, find out if our coaching can help you.**

Overview of the conversation

* **1m30s** So what projects are you working on at the moment?
* **3m50s** How are you working on the problem of expert disagreement?
* **6m0s** Is this the same method as the double crux process that was developed at the Center for Applied Rationality?
* **10m** Why did the Open Philanthropy Project decide this was a very valuable project to fund?
* **13m** Is the double crux process actually that effective?
* **14m50s** Is Facebook dangerous?
* **17m** What makes for a good life? Can you be mistaken about having a good life?
* **19m** Should more people write books?

13 Sep 2017, 1h 14min

#6 - Toby Ord on why the long-term future matters more than anything else & what to do about it

Of all the people whose well-being we should care about, only a small fraction are alive today. The rest are members of future generations who are yet to exist. Whether they’ll be born into a world that is flourishing or disintegrating – and indeed, whether they will ever be born at all – is in large part up to us. As such, the welfare of future generations should be our number one moral concern.

This conclusion holds true regardless of whether your moral framework is based on common sense, consequences, rules of ethical conduct, cooperating with others, virtuousness, keeping options open – or just a sense of wonder about the universe we find ourselves in.

That’s the view of Dr Toby Ord, a philosophy Fellow at the University of Oxford and co-founder of the effective altruism community. In this episode of the 80,000 Hours Podcast Dr Ord makes the case that aiming for a positive long-term future is likely the best way to improve the world.

Apply for personalised coaching, see what questions are asked when, and read extra resources to learn more.

We then discuss common objections to long-termism, such as the idea that benefits to future generations are less valuable than those to people alive now, or that we can’t meaningfully benefit future generations beyond taking the usual steps to improve the present.

Later the conversation turns to how individuals can change, and have changed, the course of history, what could go wrong and why, and whether plans to colonise Mars would actually put humanity in a safer position than it is today.

This episode goes deep into the most distinctive features of our advice. It’s likely the most in-depth discussion of how 80,000 Hours and the effective altruism community think about the long-term future, and why we so often give it top priority.

It’s best to subscribe, so you can listen at leisure on your phone, speed up the conversation if you like, and get notified about future episodes. You can do so by searching ‘80,000 Hours’ wherever you get your podcasts.

Want to help ensure humanity has a positive future instead of destroying itself? We want to help.

We’ve helped hundreds of people compare their options, get introductions, and find jobs important for the long-run future. If you want to work on any of the problems discussed in this episode, such as artificial intelligence or biosecurity, find out if our coaching can help you.

Overview of the discussion

* 3m30s - Why is the long-term future of humanity such a big deal, and perhaps the most important issue for us to be thinking about?
* 9m05s - Five arguments that future generations matter
* 21m50s - How bad would it be if humanity went extinct or civilization collapsed?
* 26m40s - Why do people start saying such strange things when this topic comes up?
* 30m30s - Are there any other reasons to prioritize thinking about the long-term future of humanity that you wanted to raise before we move to objections?
* 36m10s - What is this school of thought called?

6 Sep 2017, 2h 8min

#5 - Alex Gordon-Brown on how to donate millions in your 20s working in quantitative trading

Quantitative financial trading is one of the highest paying parts of the world’s highest paying industry. 25 to 30 year olds with outstanding maths skills can earn millions a year in an obscure set of ‘quant trading’ firms, where they program computers with predefined algorithms to allow them to trade very quickly and effectively.

Update: we're headhunting people for quant trading roles. Want to be kept up to date about particularly promising roles we're aware of for earning to give in quantitative finance? Get notified by letting us know here.

The high pay makes quant trading an attractive place to work for people who want to ‘earn to give’, and we know several people who are able to donate over a million dollars a year to effective charities by working in it. Who are these people? What is the job like? And is there a risk that their work harms the world in other ways?

Apply for personalised coaching, see what questions are asked when, and read extra resources to learn more.

I spoke at length with Alexander Gordon-Brown, who has worked as a quant trader in London for the last three and a half years and donated hundreds of thousands of pounds. We covered:

* What quant traders do and how much they earn.
* Whether their work is beneficial or harmful for the world.
* How to figure out if you’re a good personal fit for quant trading, and if so how to break into the industry.
* Whether he enjoys the work and finds it motivating, and what other careers he considered.
* What variety of positions are on offer, and what the culture is like in different firms.
* How he decides where to donate, and whether he has persuaded his colleagues to join him.

Want to earn to give for effective charities in quantitative trading? We want to help.

We’ve helped dozens of people plan their earning to give careers, and put them in touch with mentors. If you want to work in quant trading, apply for our free coaching service.

What questions are asked when?

* 1m30s - What is quant trading and how much do quant traders earn?
* 4m45s - How do quant trading firms manage the risks they face and avoid bankruptcy?
* 7m05s - Do other traders also donate to charity and has Alex convinced them?
* 9m45s - How do they track the performance of each trader?
* 13m00s - What does the daily schedule of a quant trader look like? What do you do in the morning, afternoon, etc?

28 Aug 2017, 1h 45min

#4 - Howie Lempel on pandemics that kill hundreds of millions and how to stop them

What disaster is most likely to kill more than 10 million human beings in the next 20 years? Terrorism? Famine? An asteroid?

Actually it’s probably a pandemic: a deadly new disease that spreads out of control. We’ve recently seen the risks with Ebola and swine flu, but they pale in comparison to the Spanish flu, which killed 3% of the world’s population between 1918 and 1920. A pandemic of that scale today would kill 200 million.

In this in-depth interview I speak to Howie Lempel, who spent years studying pandemic preparedness for the Open Philanthropy Project. We spend the first 20 minutes covering his work at the foundation, then discuss how bad the pandemic problem is, why it’s probably getting worse, and what can be done about it.

Full transcript, apply for personalised coaching to help you work on pandemic preparedness, see what questions are asked when, and read extra resources to learn more.

In the second half we go through where you personally could study and work to tackle one of the worst threats facing humanity.

Want to help ensure we have no severe pandemics in the 21st century? We want to help.

We’ve helped dozens of people formulate their plans, and put them in touch with academic mentors. If you want to work on pandemic preparedness, apply for our free coaching service.

* 2m - What does the Open Philanthropy Project do? What’s it like to work there?
* 16m27s - What grants did OpenPhil make in pandemic preparedness? Did they work out?
* 22m56s - Why is pandemic preparedness such an important thing to work on?
* 31m23s - How many people could die in a global pandemic? Is Contagion a realistic movie?
* 37m05s - Why the risk is getting worse due to scientific discoveries
* 40m10s - How would dangerous pathogens get released?
* 45m27s - Would society collapse if a billion people die in a pandemic?
* 49m25s - The plague, Spanish flu, smallpox, and other historical pandemics
* 58m30s - How are risks affected by sloppy research security or the existence of factory farming?
* 1h7m30s - What's already being done? Why institutions for dealing with pandemics are really insufficient.
* 1h14m30s - What the World Health Organisation should do but can’t.
* 1h21m51s - What charities do about pandemics and why they aren’t able to fix things
* 1h25m50s - How long would it take to make vaccines?
* 1h30m40s - What does the US government do to protect Americans? It’s a mess.
* 1h37m20s - What kinds of people work on this problem and what are they doing?
* 1h46m30s - Are there things that we ought to be banning or technologies that we should be trying not to develop because we're just better off not having them?
* 1h49m35s - What kind of reforms are needed at the international level?
* 1h54m40s - Where should people who want to tackle this problem go to work?
* 1h59m50s - Are there any technologies we need to urgently develop?
* 2h04m20s - What about trying to stop humans from having contact with wild animals?
* 2h08m5s - What should people study if they're young and choosing their major; what should they do a PhD in? Where should they study, and with whom?

23 Aug 2017, 2h 35min

#3 - Dario Amodei on OpenAI and how AI will change the world for good and ill

Just two years ago OpenAI didn’t exist. It’s now among the most elite groups of machine learning researchers. They’re trying to make an AI that’s smarter than humans and have $1b at their disposal.

Even stranger for a Silicon Valley start-up, it’s not a business, but rather a non-profit founded by Elon Musk and Sam Altman among others, to ensure the benefits of AI are distributed broadly to all of society.

I did a long interview with one of its first machine learning researchers, Dr Dario Amodei, to learn about:

* OpenAI’s latest plans and research progress.
* His paper *Concrete Problems in AI Safety*, which outlines five specific ways machine learning algorithms can act in dangerous ways their designers don’t intend - something OpenAI has to work to avoid.
* How listeners can best go about pursuing a career in machine learning and AI development themselves.

Full transcript, apply for personalised coaching to work on AI safety, see what questions are asked when, and read extra resources to learn more.

* 1m33s - What OpenAI is doing, Dario’s research and why AI is important
* 13m - Why OpenAI scaled back its Universe project
* 15m50s - Why AI could be dangerous
* 24m20s - Would smarter than human AI solve most of the world’s problems?
* 29m - Paper on five concrete problems in AI safety
* 43m48s - Has OpenAI made progress?
* 49m30s - What this back flipping noodle can teach you about AI safety
* 55m30s - How someone can pursue a career in AI safety and get a job at OpenAI
* 1h02m30s - Where and what should people study?
* 1h4m15s - What other paradigms for AI are there?
* 1h7m55s - How do you go from studying to getting a job? What places are there to work?
* 1h13m30s - If there's a 17-year-old listening here what should they start reading first?
* 1h19m - Is this a good way to develop your broader career options? Is it a safe move?
* 1h21m10s - What if you’re older and haven’t studied machine learning? How do you break in?
* 1h24m - What about doing this work in academia?
* 1h26m50s - Is the work frustrating because solutions may not exist?
* 1h31m35s - How do we prevent a dangerous arms race?
* 1h36m30s - Final remarks on how to get into doing useful work in machine learning

21 July 2017, 1h 38min

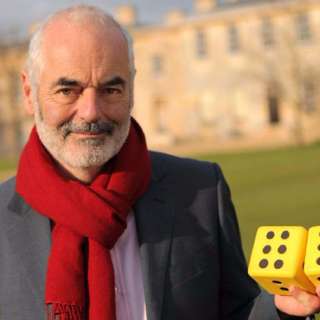

#2 - David Spiegelhalter on risk, stats and improving understanding of science

Recorded in 2015 by Robert Wiblin with colleague Jess Whittlestone at the Centre for Effective Altruism, and recovered from the dusty 80,000 Hours archives.

David Spiegelhalter is a statistician at the University of Cambridge and something of an academic celebrity in the UK. Part of his role is to improve the public understanding of risk - especially everyday risks we face like getting cancer or dying in a car crash. As a result he’s regularly in the media explaining numbers in the news, trying to help both ordinary people and politicians focus on the important risks we face, and avoid being distracted by flashy risks that don’t actually have much impact.

Summary, full transcript and extra links to learn more.

To help make sense of the uncertainties we face in life he has had to invent concepts like the microlife, a 30-minute change in life expectancy (https://en.wikipedia.org/wiki/Microlife).

We wanted to learn whether he thought a lifetime of work communicating science had actually had much impact on the world, and what advice he might have for people planning their careers today.

21 June 2017, 33min

#1 - Miles Brundage on the world's desperate need for AI strategists and policy experts

Robert Wiblin, Director of Research at 80,000 Hours, speaks with Miles Brundage, research fellow at the University of Oxford's Future of Humanity Institute. Miles studies the social implications surrounding the development of new technologies and has a particular interest in artificial general intelligence, that is, an AI system that could do most or all of the tasks humans could do.

This interview complements our profile of the importance of positively shaping artificial intelligence and our guide to careers in AI policy and strategy.

Full transcript, apply for personalised coaching to work on AI strategy, see what questions are asked when, and read extra resources to learn more.

5 June 2017, 55min

#0 – Introducing the 80,000 Hours Podcast

80,000 Hours is a non-profit that provides research and other support to help people switch into careers that effectively tackle the world's most pressing problems. This podcast is just one of many things we offer, the others of which you can find at 80000hours.org. Since 2017 this show has been putting out interviews about the world's most pressing problems and how to solve them — which some people enjoy because they love to learn about important things, and others are using to figure out what they want to do with their careers or with their charitable giving. If you haven't yet spent a lot of time with 80,000 Hours or our general style of thinking, called effective altruism, it's probably really helpful to first go through the episodes that set the scene, explain our overall perspective on things, and generally offer all the background information you need to get the most out of the episodes we're making now. That's why we've made a new feed with ten carefully selected episodes from the show's archives, called 'Effective Altruism: An Introduction'. You can find it by searching for 'Effective Altruism' in your podcasting app or at 80000hours.org/intro. 
Or, if you’d rather listen on this feed, here are the ten episodes we recommend you listen to first:

• #21 – Holden Karnofsky on the world's most intellectual foundation and how philanthropy can have maximum impact by taking big risks
• #6 – Toby Ord on why the long-term future of humanity matters more than anything else and what we should do about it
• #17 – Will MacAskill on why our descendants might view us as moral monsters
• #39 – Spencer Greenberg on the scientific approach to updating your beliefs when you get new evidence
• #44 – Paul Christiano on developing real solutions to the 'AI alignment problem'
• #60 – What Professor Tetlock learned from 40 years studying how to predict the future
• #46 – Hilary Greaves on moral cluelessness, population ethics and tackling global issues in academia
• #71 – Benjamin Todd on the key ideas of 80,000 Hours
• #50 – Dave Denkenberger on how we might feed all 8 billion people through a nuclear winter
• 80,000 Hours Team chat #3 – Koehler and Todd on the core idea of effective altruism and how to argue for it

1 May 2017, 3min