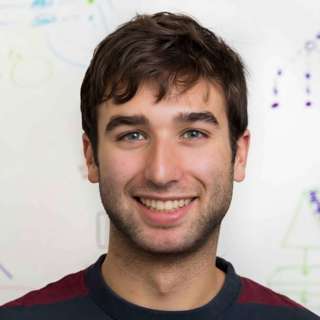

#51 - Martin Gurri on the revolt of the public & crisis of authority in the information age

Politics in rich countries seems to be going nuts. What's the explanation? Rising inequality? The decline of manufacturing jobs? Excessive immigration? Martin Gurri spent decades as a CIA analyst, and in his 2014 book *The Revolt of the Public and the Crisis of Authority in the New Millennium*, he predicted political turbulence for an entirely different reason: new communication technologies were flipping the balance of power between the public and traditional authorities.

In 1959 the President could control the narrative by leaning on his friends at four TV stations, who felt it was proper to present the nation's leader in a positive light, no matter their flaws. Today, it's impossible to prevent someone from broadcasting any grievance online, whether it's a contrarian insight or an insane conspiracy theory.

Links to learn more, summary and full transcript.

According to Gurri, trust in society's institutions - police, journalists, scientists and more - has been undermined by constant criticism from outsiders. Exposed to a cacophony of conflicting opinions on every issue, the public takes fewer truths for granted. We are now free to see our leaders as the flawed human beings they have always been, and we are not amused.

Suspicious that they are being betrayed by elites, the public can also use technology to coordinate spontaneously and express its anger. Keen to 'throw the bastards out', protesters take to the streets, united by what they don't like, but without a shared agenda or the institutional infrastructure to figure out how to fix things. Some popular movements have come to view any attempt to exercise power over others as suspect.

If Gurri is to be believed, protest movements in Egypt, Spain, Greece and Israel in 2011 followed this script, while Brexit, Trump and the French yellow vests movement subsequently vindicated his theory. In this model, politics won't return to its old equilibrium any time soon. The leaders of tomorrow will need a new message and style if they hope to maintain any legitimacy in this less hierarchical world. Otherwise, we're in for decades of grinding conflict between traditional centres of authority and the general public, who doubt both their loyalty and their competence.

But how much should we believe this theory? Why do Canada and Australia remain pools of calm in the storm? Aren't some malcontents quite concrete in their demands? And are protest movements actually more common (or more nihilistic) than they were decades ago?

In today's episode we ask these questions and add an hour-long discussion with two of Rob's colleagues - Keiran Harris and Michelle Hutchinson - to further explore the ideas in the book. The conversation covers:

* How do we know that the internet is driving this rather than some other phenomenon?
* How do technological changes enable social and political change?
* The historical role of television
* Are people also more disillusioned now with sports heroes and actors?
* Which countries are finding good ways to make politics work in this new era?
* What are the implications for the threat of totalitarianism?
* What is this going to do to international relations? Will it make it harder for countries to cooperate and avoid conflict?

Get this episode by subscribing to our podcast on the world’s most pressing problems and how to solve them: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.

29 Jan 2019, 2h 31min

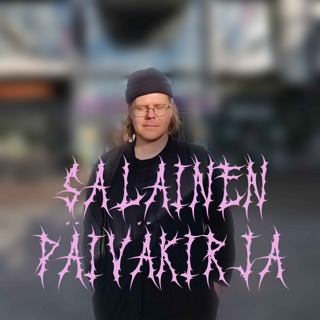

#50 - David Denkenberger on how to feed all 8b people through an asteroid/nuclear winter

If an asteroid impact or nuclear winter blocked the sun for years, our inability to grow food would result in billions dying of starvation, right? According to Dr David Denkenberger, co-author of Feeding Everyone No Matter What: no. If he's to be believed, nobody need starve at all.

Even without the sun, David sees the Earth as a bountiful food source: mushrooms farmed on decaying wood, bacteria fed with natural gas, fish and mussels supported by a sudden upwelling of ocean nutrients, and more.

Dr Denkenberger is an Assistant Professor at the University of Alaska Fairbanks, and he's out to spread the word that while a nuclear winter might be horrible, experts have been mistaken to assume that mass starvation is inevitable. In fact, the only thing that would prevent us from feeding the world is insufficient preparation.

∙ Links to learn more, summary and full transcript

Not content to just write a book pointing this out, David has gone on to found a growing non-profit - the Alliance to Feed the Earth in Disasters (ALLFED) - to prepare the world to feed everyone come what may. He expects that today 10% of people would find enough food to survive a massive disaster. In principle, if we did everything right, nobody need go hungry. But being more realistic about how much we're likely to invest, David thinks a plan to inform people ahead of time could save 30%, and a decent research and development scheme 80%.

∙ 80,000 Hours' updated article on How to find the best charity to give to ∙ A potential donor evaluates ALLFED

According to David's published cost-benefit analyses, work on this problem may be able to save lives, in expectation, for under $100 each, making it an incredible investment. These preparations could also help make humanity more resilient to global catastrophic risks, by forestalling an 'everyone for themselves' mentality that could cause trade and civilization to unravel.

But some worry that David's cost-effectiveness estimates are exaggerations, so I challenge him on the practicality of his approach, and how much his non-profit's work would actually matter in a post-apocalyptic world. In our extensive conversation, we cover:

* How could the sun end up getting blocked, or agriculture otherwise be decimated?
* What are all the ways we could eat nonetheless? What kind of life would this be?
* Can these methods be scaled up fast?
* What is his organisation, ALLFED, actually working on?
* How does he estimate the cost-effectiveness of this work, and what are the biggest weaknesses of the approach?
* How would more food affect the post-apocalyptic world? Won't people figure it out at that point anyway?
* Why not just leave guidebooks with this information in every city?
* Would these preparations make nuclear war more likely?
* What kind of people is ALLFED trying to hire?
* What would ALLFED do with more money?
* How he ended up doing this work, and his other engineering proposals for improving the world, including ideas to prevent a supervolcano explosion.

Get this episode by subscribing to our podcast on the world’s most pressing problems and how to solve them: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.

27 Dec 2018, 2h 57min

#49 - Rachel Glennerster on a year's worth of education for 30c & other development 'best buys'

If I told you it's possible to deliver an extra year of ideal primary-level education for under $1, would you believe me? Hopefully not - the claim is absurd on its face. But it may be true nonetheless. The very best education interventions are phenomenally cost-effective, and they're not the kinds of things you'd expect, says Dr Rachel Glennerster.

She's Chief Economist at the UK's foreign aid agency DFID, and used to run J-PAL, the world-famous anti-poverty research centre based in MIT's Economics Department, where she studied the impact of a wide range of approaches to improving education, health, and governing institutions. According to Dr Glennerster:

"...when we looked at the cost effectiveness of education programs, there were a ton of zeros, and there were a ton of zeros on the things that we spend most of our money on. So more teachers, more books, more inputs, like smaller class sizes - at least in the developing world - seem to have no impact, and that's where most government money gets spent."

"But measurements for the top ones - the most cost-effective programs - say they deliver 460 LAYS per £100 spent ($US130). LAYS are Learning-Adjusted Years of Schooling. Each one is the equivalent of the best possible year of education you can have - Singapore-level."

Links to learn more, summary and full transcript.

"...the two programs that come out as spectacularly effective... well, the first is just rearranging kids in a class."

"You have to test the kids, so that you can put the kids who are performing at grade two level in the grade two class, and the kids who are performing at grade four level in the grade four class, even if they're different ages - and they learn so much better. So that's why it's so phenomenally cost-effective, because it really doesn't cost anything."

"The other one is providing information. So sending information over the phone [for example about how much more people earn if they do well in school and graduate]. So these really small nudges. Now none of those nudges will individually transform any kid's life, but they are so cheap that you get these fantastic returns on investment - and we do very little of that kind of thing."

In this episode, Dr Glennerster shares her decades of accumulated wisdom on which anti-poverty programs are overrated, which are neglected opportunities, and how we can know the difference, across a range of fields including health, empowering women and macroeconomic policy.

Regular listeners will be wondering: have we forgotten all about the lessons from episode 30 of the show with Dr Eva Vivalt? She threw several buckets of cold water on the hope that we could accurately measure the effectiveness of social programs at all. According to Vivalt, her dataset of hundreds of randomised controlled trials indicates that social science findings don’t generalize well at all. The results of a trial at a school in Namibia tell us remarkably little about how a similar program will perform if delivered at another school in Namibia - let alone if it's attempted in India instead.

Rachel offers a different and more optimistic interpretation of Eva's findings. To learn more and figure out who you sympathise with more, you'll just have to listen to the episode. Regardless, Vivalt and Glennerster agree that we should continue to run these kinds of studies, and today’s episode delves into the latest ideas in global health and development.

Get this episode by subscribing: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.
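The '30c' in the episode title can be recovered from the figures Dr Glennerster quotes: 460 LAYS per £100 spent, or about $US130. The sketch below is a minimal back-of-the-envelope check that takes those quoted numbers at face value, including the quoted pound-to-dollar conversion rather than any current exchange rate; the variable and function names are illustrative.

```python
# Figures as quoted in the episode description (taken at face value):
# 460 LAYS (Learning-Adjusted Years of Schooling) per £100 spent, ~US$130.
LAYS_DELIVERED = 460
SPEND_GBP = 100
SPEND_USD = 130  # conversion as quoted in the episode, not a live exchange rate


def cost_per_lay(spend: float, lays: float) -> float:
    """Cost of delivering one Learning-Adjusted Year of Schooling."""
    return spend / lays


usd_per_lay = cost_per_lay(SPEND_USD, LAYS_DELIVERED)
gbp_per_lay = cost_per_lay(SPEND_GBP, LAYS_DELIVERED)

print(f"US${usd_per_lay:.2f} per LAY (about {round(usd_per_lay * 100)} cents)")
print(f"£{gbp_per_lay:.2f} per LAY")
```

This works out to roughly 28 US cents (about 22 pence) per LAY, consistent with the title's 30c and well under the $1 mentioned at the start. It only restates the quoted estimate; whether that estimate carries over to other schools and countries is exactly what the Vivalt discussion questions.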

20 Dec 2018, 1h 35min

#48 - Brian Christian on better living through the wisdom of computer science

Please let us know if we've helped you: fill out our annual impact survey.

Ever felt that you were so busy you spent all your time paralysed trying to figure out where to start, and couldn't get much done? Computer scientists have a term for this - thrashing - and it's a common reason our computers freeze up. The solution, for people as well as laptops, is to 'work dumber': pick something at random and finish it, without wasting time thinking about the bigger picture.

Bestselling author Brian Christian studied computer science, and in the book Algorithms to Live By he's out to find the lessons it can offer for a better life. He investigates when to quit your job, when to marry, the best way to sell your house, how long to spend on a difficult decision, and how much randomness to inject into your life. In each case computer science gives us a theoretically optimal solution, and in this episode we think hard about whether its models match our reality.

Links to learn more, summary and full transcript.

One genre of problems Brian explores in his book is 'optimal stopping problems', the canonical example of which is 'the secretary problem'. Imagine you're hiring a secretary: you receive *n* applicants, they show up in a random order, and you interview them one after another. After each interview you either have to hire that person on the spot and dismiss everybody else, or send them away and lose the option to hire them in future. It turns out most of life can be viewed this way - a series of unique opportunities you pass by that will never be available in exactly the same way again.

So how do you attempt to hire the very best candidate in the pool? There's a risk that you stop before finding the best, and a risk that you set your standards too high and let the best candidate pass you by.

Mathematicians of the mid-twentieth century produced an elegant optimal approach: spend one over *e*, or approximately 37%, of your search just establishing a baseline without hiring anyone, no matter how promising they seem. Then immediately hire the next person who's better than anyone you've seen so far. It turns out that your odds of success in this scenario are also about 37%. And both the optimal strategy and the odds of success stay close to 37% regardless of the size of the pool: as *n* goes to infinity you still want to follow this 37% rule, and you still have a 37% chance of success, even if you interview a million people.

But if you have the option to go back, say by apologising to the first applicant and begging them to come work with you, and you have a 50% chance of your apology being accepted, then the optimal explore percentage rises all the way to 61%.

Today’s episode focuses on Brian’s book-length exploration of how insights from computer algorithms can and can't be applied to our everyday lives. We cover:

* Computational kindness, and the best way to schedule meetings
* How can we characterize a computational model of what people are actually doing, and is there a rigorous way to analyse just how good their instincts actually are?
* What’s it like being a human confederate in the Turing test competition?
* Is trying to detect fake social media accounts a losing battle?
* The canonical explore/exploit problem in computer science: the multi-armed bandit
* What’s the optimal way to buy or sell a house?
* Why is information economics so important?
* What kind of decisions should people randomize more in life?
* How much time should we spend on prioritisation?

Get this episode by subscribing: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.
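The 37% rule can be checked numerically. The following is a minimal sketch, assuming the classic version of the secretary problem: applicants arrive in uniformly random order, they can be strictly ranked, nobody can be recalled once sent away, and it only counts as a success if you hire the single best applicant. It computes the exact success probability for every possible baseline length and cross-checks one case by simulation; the function names are hypothetical, not taken from the book.

```python
import math
import random


def success_probability(n: int, k: int) -> float:
    """Exact chance of hiring the single best of n applicants when the first
    k are interviewed only to set a baseline, and we then hire the first
    applicant who beats everyone seen so far (classic no-recall setting)."""
    if k == 0:
        return 1.0 / n  # no baseline: we simply hire the first applicant
    # If the best applicant is at position i (probability 1/n), we hire them
    # iff the best of the first i-1 applicants is among the first k
    # (probability k/(i-1)). Summing over i > k gives the total.
    return (k / n) * sum(1.0 / (i - 1) for i in range(k + 1, n + 1))


def best_cutoff(n: int) -> tuple[int, float]:
    """Return (k, probability) for the baseline length k that maximises success."""
    return max(((k, success_probability(n, k)) for k in range(n)),
               key=lambda pair: pair[1])


def simulate(n: int, k: int, trials: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the same success probability."""
    wins = 0
    for _ in range(trials):
        ranks = list(range(n))  # rank n - 1 is the best applicant
        rng.shuffle(ranks)      # random arrival order
        baseline = max(ranks[:k], default=-1)
        # Hire the first later applicant who beats the baseline, if any.
        hired = next((r for r in ranks[k:] if r > baseline), None)
        if hired == n - 1:
            wins += 1
    return wins / trials


if __name__ == "__main__":
    for n in (10, 100, 1000):
        k, p = best_cutoff(n)
        print(f"n={n}: skip {k} ({k / n:.0%}), success {p:.3f}")
    print(f"1/e = {1 / math.e:.3f}")
    estimate = simulate(100, 37, 10_000, random.Random(0))
    print(f"simulated, n=100, k=37: {estimate:.3f}")
```

Running it should show the best baseline length staying near 37% of *n* for larger pools, and the success probability approaching 1/*e* (about 0.368) as *n* grows, with the simulated estimate agreeing with the exact value up to sampling noise. The sketch deliberately leaves out the 'go back and apologise' variant, since its 61% figure depends on additional assumptions about how recall works.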

22 Nov 2018, 3h 15min

#47 - Catherine Olsson & Daniel Ziegler on the fast path into high-impact ML engineering roles

After dropping out of a machine learning PhD at Stanford, Daniel Ziegler needed to decide what to do next. He’d always enjoyed building stuff and wanted to shape the development of AI, so he thought a research engineering position at an org dedicated to aligning AI with human interests could be his best option. He decided to apply to OpenAI, and spent about 6 weeks preparing for the interview before landing the job. His PhD, by contrast, might have taken 6 years. Daniel thinks this highly accelerated career path may be possible for many others.

On today’s episode Daniel is joined by Catherine Olsson, who has also worked at OpenAI, and left her computational neuroscience PhD to become a research engineer at Google Brain. She and Daniel share this piece of advice for those curious about this career path: just dive in. If you're trying to get good at something, just start doing that thing, and figure out that way what's necessary to be able to do it well. Catherine has even created a simple step-by-step guide for 80,000 Hours, to make it as easy as possible for others to copy her and Daniel's success.

Please let us know how we've helped you: fill out our 2018 annual impact survey so that 80,000 Hours can continue to operate and grow.

Blog post with links to learn more, a summary & full transcript.

Daniel thinks the key for him was nailing the job interview. OpenAI needed him to be able to demonstrate the ability to do the kind of stuff he'd be working on day-to-day. So his approach was to take a list of 50 key deep reinforcement learning papers, read one or two a day, and pick a handful to actually reproduce. He spent a bunch of time coding in Python and TensorFlow, sometimes 12 hours a day, trying to debug and tune things until they were actually working. Daniel emphasizes that the most important thing was to practice *exactly* those things that he knew he needed to be able to do.

His dedicated preparation also led to an offer from the Machine Intelligence Research Institute, and so he had the opportunity to decide between two organisations focused on the global problem that most concerns him.

Daniel’s path might seem unusual, but both he and Catherine expect it can be replicated by others. If they're right, it could greatly increase our ability to get new people into important ML roles in which they can make a difference, as quickly as possible.

Catherine says that her move from OpenAI to an ML research team at Google now allows her to bring a different set of skills to the table. Technical AI safety is a multifaceted area of research, and the many sub-questions in areas such as reward learning, robustness, and interpretability all need to be answered to maximize the probability that AI development goes well for humanity.

Today’s episode combines the expertise of two pioneers and is a key resource for anyone wanting to follow in their footsteps. We cover:

* What are OpenAI and Google Brain doing?
* Why work on AI?
* Do you learn more on the job, or while doing a PhD?
* Controversial issues within ML
* Is replicating papers a good way of determining suitability?
* What % of software developers could make similar transitions?
* How in-demand are research engineers?
* The development of Dota 2 bots
* Do research scientists have more influence on the vision of an org?
* Has learning more made you more or less worried about the future?

Get this episode by subscribing: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.

2 Nov 2018, 2h 4min

#46 - Hilary Greaves on moral cluelessness & tackling crucial questions in academia

The barista gives you your coffee and change, and you walk away from the busy line. But you suddenly realise she gave you $1 less than she should have. Do you brush your way past the people now waiting, or just accept this as a dollar you’re never getting back?

According to philosophy Professor Hilary Greaves - Director of Oxford University's Global Priorities Institute, which is hiring - this simple decision will completely change the long-term future by altering the identities of almost all future generations.

How? Because by rushing back to the counter, you slightly change the timing of everything else people in line do during that day - including changing the timing of the interactions they have with everyone else. Eventually these causal links will reach someone who was going to conceive a child. By causing a child to be conceived a few fractions of a second earlier or later, you change the sperm that fertilizes the egg, resulting in a totally different person.

So asking for that $1 has now made the difference between all the things that this actual child will do in their life, and all the things that the merely possible child - who didn't exist because of what you did - would have done if you had decided not to worry about it.

As that child's actions ripple out to everyone else who conceives down the generations, ultimately the entire human population will become different, all for the sake of your dollar. Will your choice cause a future Hitler to be born, or not to be born? Probably both!

Links to learn more, summary and full transcript.

Some find this concerning. The actual long-term effects of your decisions are so unpredictable, it looks like you’re totally clueless about what's going to lead to the best outcomes. It might lead to decision paralysis - you won’t be able to take any action at all.

Prof Greaves doesn’t share this concern for most real-life decisions. If there’s no reasonable way to assign probabilities to far-future outcomes, then the possibility that you might make things better in completely unpredictable ways is more or less canceled out by the equally likely opposite possibility.

But if instead we’re talking about a decision that involves highly-structured, systematic reasons for thinking there might be a general tendency of your action to make things better or worse - for example if we increase economic growth - Prof Greaves says that we don’t get to just ignore the unforeseeable effects.

When there are complex arguments on both sides, it's unclear what probabilities you should assign to this or that claim. Yet, given its importance, whether you should take the action in question actually does depend on figuring out these numbers. So, what do we do?

Today’s episode blends philosophy with an exploration of the mission and research agenda of the Global Priorities Institute: to develop the effective altruism movement within academia. We cover:

* How controversial is the multiverse interpretation of quantum physics?
* Given moral uncertainty, how should population ethics affect our real-life decisions?
* How should we think about archetypal decision theory problems?
* What are the consequences of cluelessness for those who based their donation advice on GiveWell-style recommendations?
* How could reducing extinction risk be a good cause for risk-averse people?

Get this episode by subscribing: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.

23 Oct 2018, 2h 49min

#45 - Tyler Cowen's case for maximising econ growth, stabilising civilization & thinking long-term

I've probably spent more time reading Tyler Cowen - Professor of Economics at George Mason University - than any other author. Indeed it's his incredibly popular blog Marginal Revolution that prompted me to study economics in the first place. Having spent thousands of hours absorbing Tyler's work, I was delighted to be able to question him about his latest book and personal manifesto: Stubborn Attachments: A Vision for a Society of Free, Prosperous, and Responsible Individuals.

Tyler makes the case that, despite what you may have heard, we *can* make rational judgments about what is best for society as a whole. He argues:

1. Our top moral priority should be preserving and improving humanity's long-term future
2. The way to do that is to maximise the rate of sustainable economic growth
3. We should respect human rights and follow general principles while doing so

We discuss why Tyler believes all these things, and I push back where I disagree. In particular: is higher economic growth actually an effective way to safeguard humanity's future, or should our focus really be elsewhere?

In the process we touch on many of moral philosophy's most pressing questions: Should we discount the future? How should we aggregate welfare across people? Should we follow rules or evaluate every situation individually? How should we deal with the massive uncertainty about the effects of our actions? And should we trust common sense morality or follow structured theories?

Links to learn more, summary and full transcript.

After covering the book, the conversation ranges far and wide. Will we leave the galaxy, and is it a tragedy if we don't? Is a multi-polar world less stable? Will humanity ever help wild animals? Why do we both agree that Kant and Rawls are overrated? Today's interview is released on both the 80,000 Hours Podcast and Tyler's own show: Conversations with Tyler.

Tyler may have had more influence on me than any other writer, but this conversation is richer for our remaining disagreements. If the above isn't enough to tempt you to listen, we also look at:

* Why couldn’t future technology make human life a hundred or a thousand times better than it is for people today?
* Why focus on increasing the rate of economic growth rather than making sure that it doesn’t go to zero?
* Why shouldn’t we dedicate substantial time to the successful introduction of genetic engineering?
* Why should we completely abstain from alcohol and make it a social norm?
* Why is Tyler so pessimistic about space? Is it likely that humans will go extinct before we manage to escape the galaxy?
* Is improving coordination and international cooperation a major priority?
* Why does Tyler think institutions are keeping up with technology?
* Given that our actions seem to have very large and morally significant effects in the long run, are our moral obligations very onerous?
* Can art be intrinsically valuable?
* What does Tyler think Derek Parfit was most wrong about, and what was he most right about that’s unappreciated today?

Get this episode by subscribing: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.

17 Oct 2018, 2h 30min

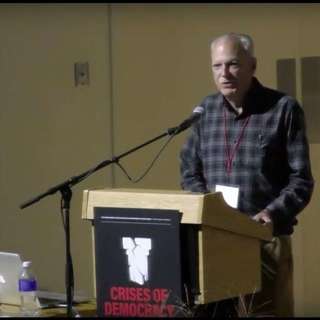

#44 - Paul Christiano on how we'll hand the future off to AI, & solving the alignment problem

Paul Christiano is one of the smartest people I know. After our first session produced such great material, we decided to do a second recording, resulting in our longest interview so far. While it's challenging at times, I strongly recommend listening - Paul works on AI himself and has an unusually well-thought-through view of how it will change the world. This is now the top resource I'm going to refer people to if they're interested in positively shaping the development of AI, and want to understand the problem better. Even though I'm familiar with Paul's writing, I felt I was learning a great deal and am now in a better position to make a difference to the world.

A few of the topics we cover are:

* Why Paul expects AI to transform the world gradually rather than explosively and what that would look like
* Several concrete methods OpenAI is trying to develop to ensure AI systems do what we want even if they become more competent than us
* Why AI systems will probably be granted legal and property rights
* How an advanced AI that doesn't share human goals could still have moral value
* Why machine learning might take over science research from humans before it can do most other tasks
* In which decade we should expect human labour to become obsolete, and how this should affect your savings plan

Links to learn more, summary and full transcript.

Important new article: These are the world’s highest impact career paths according to our research.

Here's a situation we all regularly confront: you want to answer a difficult question, but aren't quite smart or informed enough to figure it out for yourself. The good news is you have access to experts who *are* smart enough to figure it out. The bad news is that they disagree.

If given plenty of time - and enough arguments, counterarguments and counter-counter-arguments between all the experts - should you eventually be able to figure out which is correct? What if one expert were deliberately trying to mislead you? And should the expert with the correct view just tell the whole truth, or will competition force them to throw in persuasive lies in order to have a chance of winning you over?

In other words: does 'debate', in principle, lead to truth?

According to Paul Christiano - researcher at the machine learning research lab OpenAI and legendary thinker in the effective altruism and rationality communities - this question is of more than mere philosophical interest. That's because 'debate' is a promising method of keeping artificial intelligence aligned with human goals, even if it becomes much more intelligent and sophisticated than we are.

It's a method OpenAI is actively trying to develop, because in the long term it wants to train AI systems to make decisions that are too complex for any human to grasp, but without the risks that arise from a complete loss of human oversight.

If AI-1 is free to choose any line of argument in order to attack the ideas of AI-2, and AI-2 always seems to successfully defend them, it suggests that every possible line of argument would have been unsuccessful. But does that mean that the ideas of AI-2 were actually right? It would be nice if the optimal strategy in debate were to be completely honest, provide good arguments, and respond to counterarguments in a valid way. But we don't know that's the case.

Get this episode by subscribing: type '80,000 Hours' into your podcasting app. The 80,000 Hours Podcast is produced by Keiran Harris.

2 Oct 2018, 3h 51min