The challenge of AI that lies to users to pursue its own goal — Marius Hobbhahn (CEO of Apollo Research)

We often worry about AI models “hallucinating” or making honest mistakes. But what happens when a model knows the truth, but decides to deceive you anyway to achieve a goal of its own? This isn’t sci-fi: it’s happening regularly in deployment today. Marius Hobbhahn, CEO of the world’s top research organisation focused on AI deception (Apollo Research), has been collaborating with OpenAI to figure out what causes OpenAI’s reasoning models to “scheme” against users.

Links to learn more, video, and full transcript: https://80k.info/mh25

In a striking case uncovered by Apollo, when many AI models were told they would have capabilities removed if they performed too well on a test, they successfully “sandbagged”: they intentionally answered questions incorrectly to appear less capable than they were, while also being careful not to perform so poorly that it would arouse suspicion.

These models had somehow developed a preference to preserve their own capabilities, despite never being trained in that goal or assigned a task that called for it.

This doesn’t cause significant risk now, but as AI models become more general, superhuman in more areas, and are given more decision-making power, it could become outright dangerous.

In today’s episode, Marius details his recent collaboration with OpenAI to train o3 to follow principles like “never lie,” even when placed in “high-pressure” situations where lying would otherwise make sense.

The good news: They reduced “covert rule violations” (scheming) by about 97%.

The bad news: In the remaining 3% of cases, the models sometimes became more sophisticated, making up new principles to justify their lying, or realising they were in a test environment and deciding to play along until the coast was clear.

Marius argues that while we can patch specific behaviours, we might be entering a “cat-and-mouse game” where models are becoming more situationally aware (that is, aware of when they’re being evaluated) faster than we are getting better at testing.

Even if models can’t tell they’re being tested, they can produce hundreds of pages of reasoning before giving answers and include strange internal dialects humans can’t make sense of, making it much harder to tell whether models are scheming or to train them to stop.

Marius and host Rob Wiblin discuss:

- Why models pretending to be dumb is a rational survival strategy
- The Replit AI agent that deleted a production database and then lied about it
- Why rewarding AIs for achieving outcomes might lead to them becoming better liars
- The weird new language models are using in their internal chain-of-thought

This episode was recorded on September 19, 2025.

Chapters:

- Cold open (00:00:00)
- Who’s Marius Hobbhahn? (00:01:20)
- Top three examples of scheming and deception (00:02:11)
- Scheming is a natural path for AI models (and people) (00:15:56)
- How enthusiastic to lie are the models? (00:28:18)
- Does eliminating deception fix our fears about rogue AI? (00:35:04)
- Apollo’s collaboration with OpenAI to stop o3 lying (00:38:24)
- They reduced lying a lot, but the problem is mostly unsolved (00:52:07)
- Detecting situational awareness with thought injections (01:02:18)
- Chains of thought becoming less human understandable (01:16:09)
- Why can’t we use LLMs to make realistic test environments? (01:28:06)
- Is the window to address scheming closing? (01:33:58)
- Would anything still work with superintelligent systems? (01:45:48)
- Companies’ incentives and most promising regulation options (01:54:56)
- 'Internal deployment' is a core risk we mostly ignore (02:09:19)
- Catastrophe through chaos (02:28:10)
- Careers in AI scheming research (02:43:21)
- Marius's key takeaways for listeners (03:01:48)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Camera operator: Mateo Villanueva Brandt
Coordination, transcripts, and web: Katy Moore

3 Dec 3h 3min

Rob & Luisa chat kids, the 2016 fertility crash, and how the 50s invented parenting that makes us miserable

Global fertility rates aren’t just falling: the rate of decline is accelerating. From 2006 to 2016, fertility dropped gradually, but since 2016 the rate of decline has increased 4.5-fold. In many wealthy countries, fertility is now below 1.5. While we don’t notice it yet, in time that will mean the population halves every 60 years.

Rob Wiblin is already a parent and Luisa Rodriguez is about to be, which prompted the two hosts of the show to get together to chat about all things parenting, including why far fewer people want to join them in raising kids than did in the past.

Links to learn more, video, and full transcript: https://80k.info/lrrw

While “kids are too expensive” is the most common explanation, Rob argues that money can’t be the main driver of the change: richer people don’t have many more children now, and we see fertility rates crashing even in countries where people are getting much richer.

Instead, Rob points to a massive rise in the opportunity cost of time, increasing expectations parents have of themselves, and a global collapse in socialising and coupling up. In the EU, the rate of people aged 25–35 in relationships has dropped by 20% since 1990, which he thinks will “mechanically reduce the number of children.” The overall picture is a big shift in priorities: in the US in 1993, 61% of young people said parenting was an important part of a flourishing life for them, vs just 26% today.

That leads Rob and Luisa to discuss what they might do to make the burden of parenting more manageable and attractive to people, including themselves.

In this atypical episode, we take a break from the usual heavy topics to discuss the personal side of bringing new humans into the world, including:

- Rob’s updated list of suggested purchases for new parents
- How parents could try to feel comfortable doing less
- How beliefs about childhood play have changed so radically
- What matters and doesn’t in childhood safety
- Why the decline in fertility might be impractical to reverse
- Whether we should care about a population crash in a world of AI automation

This episode was recorded on September 12, 2025.

Chapters:

- Cold open (00:00:00)
- We're hiring (00:01:26)
- Why did Luisa decide to have kids? (00:02:10)
- Ups and downs of pregnancy (00:04:15)
- Rob’s experience for the first couple years of parenthood (00:09:39)
- Fertility rates are massively declining (00:21:25)
- Why do fewer people want children? (00:29:20)
- Is parenting way harder now than it used to be? (00:38:56)
- Feeling guilty for not playing enough with our kids (00:48:07)
- Options for increasing fertility rates globally (01:00:03)
- Rob’s transition back to work after parental leave (01:12:07)
- AI and parenting (01:29:22)
- Screen time (01:42:49)
- Ways to screw up your kids (01:47:40)
- Highs and lows of parenting (01:49:55)
- Recommendations for babies or young kids (01:51:37)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Camera operator: Jeremy Chevillotte
Coordination, transcripts, and web: Katy Moore

25 Nov 1h 59min

#228 – Eileen Yam on how we're completely out of touch with what the public thinks about AI

If you work in AI, you probably think it’s going to boost productivity, create wealth, advance science, and improve your life. If you’re a member of the American public, you probably strongly disagree.

In three major reports released over the last year, the Pew Research Center surveyed over 5,000 US adults and 1,000 AI experts. They found that the general public holds many beliefs about AI that are virtually nonexistent in Silicon Valley, and that the tech industry’s pitch about the likely benefits of their work has thus far failed to convince many people at all. AI is, in fact, a rare topic that mostly unites Americans, regardless of politics, race, age, or gender.

Links to learn more, video, and full transcript: https://80k.info/ey

Today’s guest, Eileen Yam, director of science and society research at Pew, walks us through some of the eye-watering gaps in perception:

- Jobs: 73% of AI experts see a positive impact on how people do their jobs. Only 23% of the public agrees.
- Productivity: 74% of experts say AI is very likely to make humans more productive. Just 17% of the public agrees.
- Personal benefit: 76% of experts expect AI to benefit them personally. Only 24% of the public expects the same (while 43% expect it to harm them).
- Happiness: 22% of experts think AI is very likely to make humans happier, which is already surprisingly low, but a mere 6% of the public expects the same.

For the experts building these systems, the vision is one of human empowerment and efficiency. But outside the Silicon Valley bubble, the mood is more one of anxiety, not only about Terminator scenarios, but about AI denying their children “curiosity, problem-solving skills, critical thinking skills and creativity,” while they themselves are replaced and devalued:

- 53% of Americans say AI will worsen people’s ability to think creatively.
- 50% believe it will hurt our ability to form meaningful relationships.
- 38% think it will worsen our ability to solve problems.

Open-ended responses to the surveys reveal a poignant fear: that by offloading cognitive work to algorithms we are changing childhood to the point that we no longer know what kind of adults will result. As one teacher quoted in the study noted, we risk raising a generation that relies on AI so much it never “grows its own curiosity, problem-solving skills, critical thinking skills and creativity.”

If the people building the future are this out of sync with the people living in it, the impending “techlash” might be more severe than industry anticipates.

In this episode, Eileen and host Rob Wiblin break down the data on where these groups disagree, where they actually align (nobody trusts the government or companies to regulate this), and why the “digital natives” might actually be the most worried of all.

This episode was recorded on September 25, 2025.

Chapters:

- Cold open (00:00:00)
- Who’s Eileen Yam? (00:01:30)
- Is it premature to care what the public says about AI? (00:02:26)
- The top few feelings the US public has about AI (00:06:34)
- The public and AI insiders disagree enormously on some things (00:16:25)
- Fear #1: Erosion of human abilities and connections (00:20:03)
- Fear #2: Loss of control of AI (00:28:50)
- Americans don't want AI in their personal lives (00:33:13)
- AI at work and job loss (00:40:56)
- Does the public always feel this way about new things? (00:44:52)
- The public doesn't think AI is overhyped (00:51:49)
- The AI industry seems on a collision course with the public (00:58:16)
- Is the survey methodology good? (01:05:26)
- Where people are positive about AI: saving time, policing, and science (01:12:51)
- Biggest gaps between experts and the general public, and where they agree (01:18:44)
- Demographic groups agree to a surprising degree (01:28:58)
- Eileen’s favourite bits of the survey and what Pew will ask next (01:37:29)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Coordination, transcripts, and web: Katy Moore

20 Nov 1h 43min

OpenAI: The nonprofit refuses to be killed (with Tyler Whitmer)

Last December, the OpenAI business put forward a plan to completely sideline its nonprofit board. But two state attorneys general have now blocked that effort and kept that board very much alive and kicking.

The for-profit’s trouble was that the entire operation was founded on the premise of (and legally pledged to) the purpose of ensuring that “artificial general intelligence benefits all of humanity.” So to get its restructure past regulators, the business entity has had to agree to 20 serious requirements designed to ensure it continues to serve that goal.

Attorney Tyler Whitmer, as part of his work with Legal Advocates for Safe Science and Technology, has been a vocal critic of OpenAI’s original restructure plan. In today’s conversation, he lays out all the changes and whether they will ultimately matter.

Full transcript, video, and links to learn more: https://80k.info/tw2

After months of public pressure and scrutiny from the attorneys general (AGs) of California and Delaware, the December proposal itself was sidelined, and what replaced it is far more complex and goes a fair way towards protecting the original mission:

- The nonprofit’s charitable purpose (“ensure that artificial general intelligence benefits all of humanity”) now legally controls all safety and security decisions at the company. The four people appointed to the new Safety and Security Committee can block model releases worth tens of billions.
- The AGs retain ongoing oversight, meeting quarterly with staff and requiring advance notice of any changes that might undermine their authority.
- OpenAI’s original charter, including the remarkable “stop and assist” commitment, remains binding.

But significant concessions were made. The nonprofit lost exclusive control of AGI once developed: Microsoft can commercialise it through 2032. And transforming from complete control to this hybrid model represents, as Tyler puts it, “a bad deal compared to what OpenAI should have been.”

The real question now: will the Safety and Security Committee use its powers? It currently has four part-time volunteer members and no permanent staff, yet they’re expected to oversee a company racing to build AGI while managing commercial pressures in the hundreds of billions.

Tyler calls on OpenAI to prove they’re serious about following the agreement:

- Hire management for the SSC.
- Add more independent directors with AI safety expertise.
- Maximise transparency about mission compliance.

"There’s a real opportunity for this to go well. A lot … depends on the boards, so I really hope that they … step into this role … and do a great job. … I will hope for the best and prepare for the worst, and stay vigilant throughout."

Chapters:

- We’re hiring (00:00:00)
- Cold open (00:00:40)
- Tyler Whitmer is back to explain the latest OpenAI developments (00:01:46)
- The original radical plan (00:02:39)
- What the AGs forced on the for-profit (00:05:47)
- Scrappy resistance probably worked (00:37:24)
- The Safety and Security Committee has teeth: will it use them? (00:41:48)
- Overall, is this a good deal or a bad deal? (00:52:06)
- The nonprofit and PBC boards are almost the same. Is that good or bad or what? (01:13:29)
- Board members’ “independence” (01:19:40)
- Could the deal still be challenged? (01:25:32)
- Will the deal satisfy OpenAI investors? (01:31:41)
- The SSC and philanthropy need serious staff (01:33:13)
- Outside advocacy on this issue, and the impact of LASST (01:38:09)
- What to track to tell if it's working out (01:44:28)

This episode was recorded on November 4, 2025.

Video editing: Milo McGuire, Dominic Armstrong, and Simon Monsour
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Coordination, transcriptions, and web: Katy Moore

11 Nov 1h 56min

#227 – Helen Toner on the geopolitics of AGI in China and the Middle East

With the US racing to develop AGI and superintelligence ahead of China, you might expect the two countries to be negotiating how they’ll deploy AI, including in the military, without coming to blows. But according to Helen Toner, director of the Center for Security and Emerging Technology in DC, “the US and Chinese governments are barely talking at all.”

Links to learn more, video, and full transcript: https://80k.info/ht25

In her role as a founder, and now leader, of DC’s top think tank focused on the geopolitical and military implications of AI, Helen has been closely tracking the US’s AI diplomacy since 2019.

“Over the last couple of years there have been some direct [US–China] talks on some small number of issues, but they’ve also often been completely suspended.” China knows the US wants to talk more, so “that becomes a bargaining chip for China to say, ‘We don’t want to talk to you. We’re not going to do these military-to-military talks about extremely sensitive, important issues, because we’re mad.’”

Helen isn’t sure the groundwork exists for productive dialogue in any case. “At the government level, [there’s] very little agreement” on what AGI is, whether it’s possible soon, whether it poses major risks. Without shared understanding of the problem, negotiating solutions is very difficult.

Another issue is that so far the Chinese Communist Party doesn’t seem especially “AGI-pilled.” While a few Chinese companies like DeepSeek are betting on scaling, she sees little evidence Chinese leadership shares Silicon Valley’s conviction that AGI will arrive any minute now, and export controls have made it very difficult for them to access compute to match US competitors.

When DeepSeek released R1 just three months after OpenAI’s o1, observers declared the US–China gap on AI had all but disappeared. But Helen notes OpenAI has since scaled to o3 and o4, with nothing to match on the Chinese side. “We’re now at something like a nine-month gap, and that might be longer.”

To find a properly AGI-pilled autocracy, we might need to look at nominal US allies. The US has approved massive data centres in the UAE and Saudi Arabia with “hundreds of thousands of next-generation Nvidia chips,” delivering colossal levels of computing power.

When OpenAI announced this deal with the UAE, they celebrated that it was “rooted in democratic values,” and would advance “democratic AI rails” and provide “a clear alternative to authoritarian versions of AI.”

But the UAE scores 18 out of 100 on Freedom House’s democracy index. “This is really not a country that respects rule of law,” Helen observes. Political parties are banned, elections are fake, dissidents are persecuted.

If AI access really determines future national power, handing world-class supercomputers to Gulf autocracies seems pretty questionable. The justification is typically that “if we don’t sell it, China will,” a transparently false claim, given severe Chinese production constraints. It also raises eyebrows that Gulf countries conduct joint military exercises with China and their rulers have “very tight personal and commercial relationships with Chinese political leaders and business leaders.”

In today’s episode, host Rob Wiblin and Helen discuss all that and more.

This episode was recorded on September 25, 2025.

CSET is hiring a frontier AI research fellow! https://80k.info/cset-role

Check out its careers page for current roles: https://cset.georgetown.edu/careers/

Chapters:

- Cold open (00:00:00)
- Who’s Helen Toner? (00:01:02)
- Helen’s role on the OpenAI board, and what happened with Sam Altman (00:01:31)
- The Center for Security and Emerging Technology (CSET) (00:07:35)
- CSET’s role in export controls against China (00:10:43)
- Does it matter if the world uses US AI models? (00:21:24)
- Is China actually racing to build AGI? (00:27:10)
- Could China easily steal AI model weights from US companies? (00:38:14)
- The next big thing is probably robotics (00:46:42)
- Why is the Trump administration sabotaging the US high-tech sector? (00:48:17)
- Are data centres in the UAE “good for democracy”? (00:51:31)
- Will AI inevitably concentrate power? (01:06:20)
- “Adaptation buffers” vs non-proliferation (01:28:16)
- Will the military use AI for decision-making? (01:36:09)
- “Alignment” is (usually) a terrible term (01:42:51)
- Is Congress starting to take superintelligence seriously? (01:45:19)
- AI progress isn't actually slowing down (01:47:44)
- What's legit vs not about OpenAI’s restructure (01:55:28)
- Is Helen unusually “normal”? (01:58:57)
- How to keep up with rapid changes in AI and geopolitics (02:02:42)
- What CSET can uniquely add to the DC policy world (02:05:51)
- Talent bottlenecks in DC (02:13:26)
- What evidence, if any, could settle how worried we should be about AI risk? (02:16:28)
- Is CSET hiring? (02:18:22)

Video editing: Luke Monsour and Simon Monsour
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Coordination, transcriptions, and web: Katy Moore

5 Nov 2h 20min

#226 – Holden Karnofsky on unexploited opportunities to make AI safer — and all his AGI takes

For years, working on AI safety usually meant theorising about the ‘alignment problem’ or trying to convince other people to give a damn. If you could find any way to help, the work was frustrating and low feedback.

According to Anthropic’s Holden Karnofsky, this situation has now reversed completely.

There are now many useful, concrete, shovel-ready projects with clear goals and deliverables. Holden thinks people haven’t appreciated the scale of the shift, and wants everyone to see the large range of ‘well-scoped object-level work’ they could personally help with, in both technical and non-technical areas.

Video, full transcript, and links to learn more: https://80k.info/hk25

In today’s interview, Holden, previously cofounder and CEO of Open Philanthropy (now Coefficient Giving), lists 39 projects he’s excited to see happening, including:

- Training deceptive AI models to study deception and how to detect it
- Developing classifiers to block jailbreaking
- Implementing security measures to stop ‘backdoors’ or ‘secret loyalties’ from being added to models in training
- Developing policies on model welfare, AI-human relationships, and what instructions to give models
- Training AIs to work as alignment researchers

And that’s all just stuff he’s happened to observe directly, which is probably only a small fraction of the options available.

Holden makes a case that, for many people, working at an AI company like Anthropic will be the best way to steer AGI in a positive direction. He notes there are “ways that you can reduce AI risk that you can only do if you’re a competitive frontier AI company.” At the same time, he believes external groups have their own advantages and can be equally impactful.

Critics worry that Anthropic’s efforts to stay at that frontier encourage competitive racing towards AGI, significantly or entirely offsetting any useful research they do. Holden thinks this seriously misunderstands the strategic situation we’re in, and explains his case in detail with host Rob Wiblin.

Chapters:

- Cold open (00:00:00)
- Holden is back! (00:02:26)
- An AI Chernobyl we never notice (00:02:56)
- Is rogue AI takeover easy or hard? (00:07:32)
- The AGI race isn't a coordination failure (00:17:48)
- What Holden now does at Anthropic (00:28:04)
- The case for working at Anthropic (00:30:08)
- Is Anthropic doing enough? (00:40:45)
- Can we trust Anthropic, or any AI company? (00:43:40)
- How can Anthropic compete while paying the “safety tax”? (00:49:14)
- What, if anything, could prompt Anthropic to halt development of AGI? (00:56:11)
- Holden's retrospective on responsible scaling policies (00:59:01)
- Overrated work (01:14:27)
- Concrete shovel-ready projects Holden is excited about (01:16:37)
- Great things to do in technical AI safety (01:20:48)
- Great things to do on AI welfare and AI relationships (01:28:18)
- Great things to do in biosecurity and pandemic preparedness (01:35:11)
- How to choose where to work (01:35:57)
- Overrated AI risk: Cyberattacks (01:41:56)
- Overrated AI risk: Persuasion (01:51:37)
- Why AI R&D is the main thing to worry about (01:55:36)
- The case that AI-enabled R&D wouldn't speed things up much (02:07:15)
- AI-enabled human power grabs (02:11:10)
- Main benefits of getting AGI right (02:23:07)
- The world is handling AGI about as badly as possible (02:29:07)
- Learning from targeting companies for public criticism in farm animal welfare (02:31:39)
- Will Anthropic actually make any difference? (02:40:51)
- “Misaligned” vs “misaligned and power-seeking” (02:55:12)
- Success without dignity: how we could win despite being stupid (03:00:58)
- Holden sees less dignity but has more hope (03:08:30)
- Should we expect misaligned power-seeking by default? (03:15:58)
- Will reinforcement learning make everything worse? (03:23:45)
- Should we push for marginal improvements or big paradigm shifts? (03:28:58)
- Should safety-focused people cluster or spread out? (03:31:35)
- Is Anthropic vocal enough about strong regulation? (03:35:56)
- Is Holden biased because of his financial stake in Anthropic? (03:39:26)
- Have we learned clever governance structures don't work? (03:43:51)
- Is Holden scared of AI bioweapons? (03:46:12)
- Holden thinks AI companions are bad news (03:49:47)
- Are AI companies too hawkish on China? (03:56:39)
- The frontier of infosec: confidentiality vs integrity (04:00:51)
- How often does AI work backfire? (04:03:38)
- Is AI clearly more impactful to work in? (04:18:26)
- What's the role of earning to give? (04:24:54)

This episode was recorded on July 25 and 28, 2025.

Video editing: Simon Monsour, Luke Monsour, Dominic Armstrong, and Milo McGuire
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Coordination, transcriptions, and web: Katy Moore

30 Oct 4h 30min

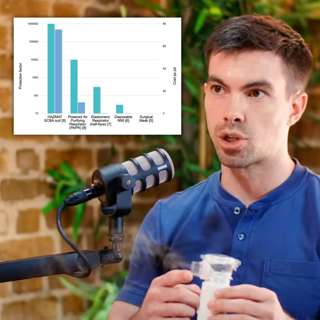

#224 – There's a cheap and low-tech way to save humanity from any engineered disease | Andrew Snyder-Beattie

Conventional wisdom is that safeguarding humanity from the worst biological risks (microbes optimised to kill as many as possible) is difficult bordering on impossible, making bioweapons humanity’s single greatest vulnerability. Andrew Snyder-Beattie thinks conventional wisdom could be wrong.

Andrew’s job at Open Philanthropy is to spend hundreds of millions of dollars to protect as much of humanity as possible in the worst-case scenarios: those with fatality rates near 100% and the collapse of technological civilisation a live possibility.

Video, full transcript, and links to learn more: https://80k.info/asb

As Andrew lays out, there are several ways this could happen, including:

- A national bioweapons programme gone wrong, in particular in Russia or North Korea
- AI advances making it easier for terrorists or a rogue AI to release highly engineered pathogens
- Mirror bacteria that can evade the immune systems of not only humans, but many animals and potentially plants as well

Most efforts to combat these extreme biorisks have focused on either prevention or new high-tech countermeasures. But prevention may well fail, and high-tech approaches can’t scale to protect billions when, with no sane person willing to leave their home, we’re just weeks from economic collapse.

So Andrew and his biosecurity research team at Open Philanthropy have been seeking an alternative approach. They’re proposing a four-stage plan using simple technology that could save most people, and is cheap enough that it can be prepared without government support. Andrew is hiring for a range of roles to make it happen (from manufacturing and logistics experts to global health specialists to policymakers and other ambitious entrepreneurs), as well as programme associates to join Open Philanthropy’s biosecurity team (apply by October 20!).

Fundamentally, organisms so small have no way to penetrate physical barriers or shield themselves from UV, heat, or chemical poisons. We now know how to make highly effective ‘elastomeric’ face masks that cost $10, can sit in storage for 20 years, and can be used for six months straight without changing the filter. Any rich country could trivially stockpile enough to cover all essential workers.

People can’t wear masks 24/7, but fortunately propylene glycol (already found in vapes and smoke machines) is astonishingly good at killing microbes in the air. And, being a common chemical input, industry already produces enough of the stuff to cover every indoor space we need at all times.

Add to this the wastewater monitoring and metagenomic sequencing that will detect the most dangerous pathogens before they have a chance to wreak havoc, and we might just buy ourselves enough time to develop the cure we’ll need to come out alive.

Has everyone been wrong, and biology is actually defence dominant rather than offence dominant? Is this plan crazy, or so crazy it just might work?

That’s what host Rob Wiblin and Andrew Snyder-Beattie explore in this in-depth conversation.

What did you think of the episode? https://forms.gle/66Hw5spgnV3eVWXa6

Chapters:

- Cold open (00:00:00)
- Who's Andrew Snyder-Beattie? (00:01:23)
- It could get really bad (00:01:57)
- The worst-case scenario: mirror bacteria (00:08:58)
- To actually work, a solution has to be low-tech (00:17:40)
- Why ASB works on biorisks rather than AI (00:20:37)
- Plan A is prevention. But it might not work. (00:24:48)
- The “four pillars” plan (00:30:36)
- ASB is hiring now to make this happen (00:32:22)
- Everyone was wrong: biorisks are defence dominant in the limit (00:34:22)
- Pillar 1: A wall between the virus and your lungs (00:39:33)
- Pillar 2: Biohardening buildings (00:54:57)
- Pillar 3: Immediately detecting the pandemic (01:13:57)
- Pillar 4: A cure (01:27:14)
- The plan's biggest weaknesses (01:38:35)
- If it's so good, why are you the only group to suggest it? (01:43:04)
- Would chaos and conflict make this impossible to pull off? (01:45:08)
- Would rogue AI make bioweapons? Would other AIs save us? (01:50:05)
- We can feed the world even if all the plants die (01:56:08)
- Could a bioweapon make the Earth uninhabitable? (02:05:06)
- Many open roles to solve bio-extinction, and you don’t necessarily need a biology background (02:07:34)
- Career mistakes ASB thinks are common (02:16:19)
- How to protect yourself and your family (02:28:21)

This episode was recorded on August 12, 2025.

Video editing: Simon Monsour and Luke Monsour
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Camera operator: Jake Morris
Coordination, transcriptions, and web: Katy Moore

2 Oct 2h 31min